Large Language Models (LLMs) such as GPT-4, Claude, Mistral, and open-source alternatives are transforming how we build applications. They power chatbots, copilots, retrieval systems, autonomous agents, and enterprise search, and they are quickly becoming central to everything from productivity tools to customer-facing platforms. That innovation brings a new generation of risks: subtle, high-impact vulnerabilities that don’t exist in traditional software architectures. We’re entering a world where inputs look like natural language, exploits hide inside documents, and attackers don’t need access to your code to compromise your system.

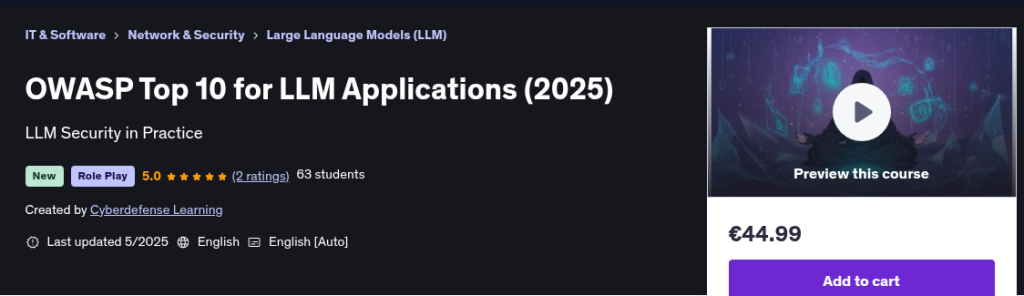

Syllabus

- Introduction to LLM Application Security

- Prompt Injection

- Sensitive Information Disclosure

- Supply Chain

- Data and Model Poisoning

- Improper Output Handling

- Excessive Agency

- System Prompt Leakage

- Vector and Embedding Weaknesses

- Misinformation

- Unbounded Consumption

- Best Practices and Future Trends in LLM Security